Scaling Sync Performance: A Customer Story

Reinhard Hafenscher

Reinhard Hafenscher

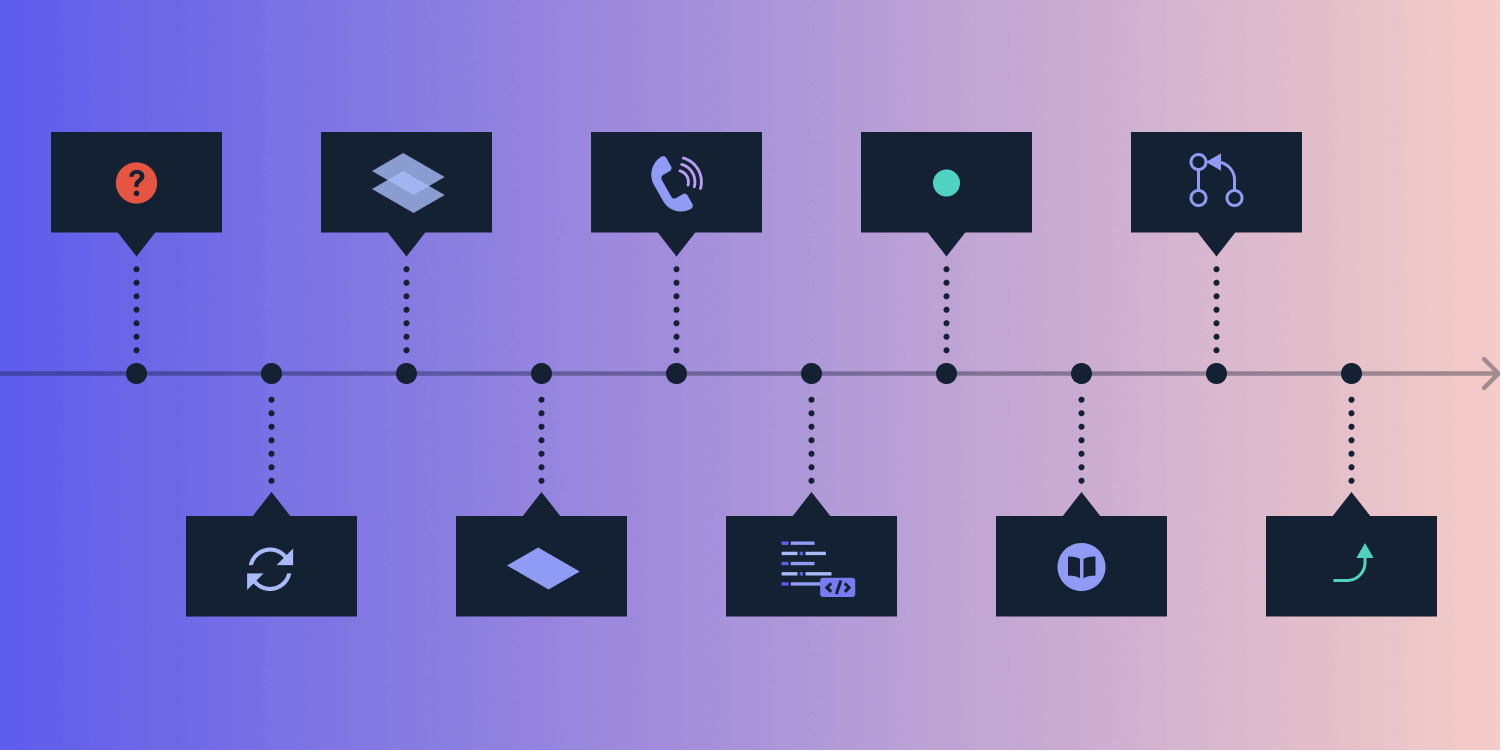

Today I want to share the tale of how a customer support request led to us improving Instant sync performance by 20 percent (as reported by the affected customer). For the sake of privacy, all names, dates, and numbers have been altered, but the relative timeline still matches reality. So, let’s start at the beginning.

June 7

ACME Inc., a customer that licenses our Instant product and uses it from the web, opened a customer support request. The company was concerned about the database (DB) load it was seeing — especially since, at this point, only a small fraction of its expected users were using the product.

Our first-level support team began triaging this request and started the process of gathering information, asking questions such as:

-

How are you using PSPDFKit Server?

-

Did the high load only appear recently?

-

How many PSPDFKit Server nodes are you running?

-

What does the configuration of those nodes look like?

June 8

ACME Inc. got back to us, and the ticket was escalated to the engineer on support, who happened to be me. The company went into detail on how it’s using PSPDFKit Server and Instant. The details aren’t important for the story, but the conclusion was the following:

-

It was making heavy use of Instant sync, with around 30 users annotating documents on approximately 150 documents concurrently. It also had plans to scale this significantly in the future.

-

The documents were deleted after use.

-

The company wasn’t using our Server-to-Server API much.

All of this was happening across 10 PSPDFKit Server instances and one rather beefy Postgres DB server.

The customer did send some logs, but unfortunately, they didn’t tell us anything useful.

So we asked even more questions:

-

Are you making use of layers?

-

Do all your users use the same layer per document?

-

How do the metrics look, especially around Instant sync?

-

Do you have any logging from the DB itself?

June 9

More information began coming in:

-

It was all happening on a single layer. This makes things easier for us, since it removes a whole world of interactions.

-

The basic DB logs showed nothing. This was expected, as by default, Postgres doesn’t log much.

At this point, since this was a critical issue for the customer, we scheduled a video call. Additionally, we asked ACME Inc. to enable more verbose logging, specifically for slow queries.

June 11

We had the call in the afternoon. The customer showed off all of its metrics, including listing all the SQL queries taking up time. One of them stuck out as taking the most time, so I made note of it for finding the source later. We also discussed scaling options (read replicas, Redis caching). The company emphasized that the current performance was OK, but it was worried about scaling.

Finally, we had something to work with. I took over the analysis and responsibility for drafting a way forward, including investigating other scaling options and how to integrate them with PSPDFKit Server.

June 14

This day was spent looking over meeting notes, checking the code, and reading documentation. There were a lot of options to consider and only a little time to do it.

The first order of business was determining where the heavy query we saw was actually called from. A quick investigation revealed that this query was called every time a new client started long polling for changes to the document. The issue is this query was very heavy:

Ecto.Query.from( l in PS.DB.Layer, where: l.id == ^layer_id, left_join: r in PS.DB.Record, on: r.layer_id == ^layer_id and r.mrev > ^remote_record_rev and l.rrev > ^remote_record_rev, order_by: [asc: r.crev, asc: r.created_at, asc: r.id], select: [ :id, :mrev, :rrev, records: [:id, :layer_id, :crev, :mrev, :content, :created_by, :updated_by, :group] ], preload: [records: r] )

Essentially, it tried to fetch all records (this is how we represent annotations and other objects in a PDF) that had changed since the last state the user knew (a number tracked in remote_record_rev).

This was bad for a multitude of reasons:

-

It’s slow, and even though all the relevant columns had indices (and I verified they were being used), we still needed to check the

recordstable, which contained large amounts of rows, since any single annotation, of all documents, across all layers, ends up in there. -

This query was called a lot — essentially, it was called every time long polling started, which at a base happens every 30 seconds per client, and more often if changes are actively being made, since long polling starts again after each change that’s sent to the client.

Getting rid of this query in the hot path seemed like an easy win, and with how our internal structure in regard to tracking changes was set up, checking the records table ended up being completely unnecessary, since if there were any pending changes, the layer already contained the information.

Looking through the list of slow queries, I also spotted a second one that stuck out:

from( u in PS.DB.User, where: u.upstream_id == ^upstream_id, select: u.id ) |> PS.Repo.one() |> case do nil -> upsert_upstream_id(upstream_id) user_id -> user_id end

This is due to how we handled users at that time: On the public API, you could specify any string as the user ID. Internally, we stored the mapping to a simple integer so we could have consistent storage use no matter how the supplied user IDs looked. Sadly, this meant that for almost every request, we had to check the DB for the mapping — hence why this query popped up.

At this stage, I also analyzed what code changes it would take to add support for read replication, but I was confident that addressing the above two mentioned issues would get us to the performance we needed.

June 15

I presented my findings to our Server team lead and proposed that we use the layer revision to determine if we needed to fetch records, and to introduce a memory cache for user IDs, since once a mapping is added, it’s permanent. Doing this would ensure that keeping the cache consistent would be easy.

After getting the signoff, I started implementation and managed to get it all done that same day. Tests were still green, so I was confident that these changes didn’t modify the behavior of the code, but I was lacking confidence in the code changes actually positively affecting performance.

June 16

With the fix working, I needed a way to verify the performance impact. I remembered looking into Locust when doing the first round of load testing for PSPDFKit Server. While it wasn’t a good fit for those tests, it would be perfect here.

I quickly got to work reading through the documentation and setting up a Locust file to test the Instant sync performance. The workflow we wanted to test was quite easy:

-

Each simulated user picks a random document.

-

Then, they call the long polling endpoint to wait for changes to the document.

-

Or, they create a new annotation in the document.

This way, I could easily compare how many users we could support before and after my changes. I won’t go into detail about how the Locust file looks, as it’s just a Python file doing a bunch of HTTP requests.

The point is, based on my limited testing, it worked, and with the changes applied, I could have measurably more users doing things when compared to before.

June 23

The fix was merged to master and backported to our latest release so we could build a nightly for the customer. After some basic QA, we finally shipped the nightly to the customer for testing.

June 29

The customer came back to us reporting that it measured sync performance, which was 20 percent better than before. The fix we implemented was a success, and the customer was happy. At this point, all that was missing was to ship a full release that included this fix.

July 9

We shipped a full release of PSPDFKit Server containing the fix, and with that, the customer issue was handled.

Conclusion

In total, it took just over a month from the initial report of the customer to having released a new version with better performance. And this was a larger task; for simpler requests, our turnaround times are usually even faster.

While a situation like this is exceptional, I think it highlights one of our core values very well. Here at PSPDFKit, it’s all about our customers and how we can best help them — be that building great products, helping them get up and running, or tackling the hard issues that prevent them from scaling up.

If you want to learn more about how we handle support, take a look at our other blogs on the topic: