How We Use Property Testing

Reinhard Hafenscher

Testing is probably one of the most important parts of software development. It aids in preventing regressions, since if a refactoring breaks things, you’ll have a test yelling at you that it’s broken. It also helps you write more maintainable code, since often, testability forces you toward cleaner implementations. And if you practice test-driven development, it even regularly helps you understand what needs to be done on the implementation side.

None of this is particularly new or interesting; almost everyone is familiar with the xUnit style of testing. A summary of how to write regular xUnit tests would be:

- Include a set of preconditions that need to hold true for the test to make sense (optional).
- Execute the code that needs to be tested.
- Assert that the result is correct.

A barebones Elixir example could look like this:

    defmodule SuperMathTest do
      use ExUnit.Case, async: true

      test "max/2 returns the largest number" do
        # Precondition: none for this test.

        # Test: we get the maximum of two numbers.
        result = SuperMath.max(2, 1)

        # Assertion: the largest number is returned.
        assert result == 2
      end
    end

For a test this simple, you usually don’t need to split the test and assertion part into two, but I find that this structure helps write more readable tests as you test more complicated code.
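The test above calls SuperMath.max/2, whose implementation isn’t shown. As a minimal sketch of a version that would make the test pass, the module could look like the following. The guard-based shape is only one possible way to write it and is an assumption made for illustration.

```elixir
defmodule SuperMath do
  # Illustrative implementation; the real module may be written differently.
  # The first clause handles "a is at least as large as b".
  def max(a, b) when a >= b, do: a

  # Every remaining case means b is strictly larger.
  def max(_a, b), do: b
end
```

Because the module never calls max/2 unqualified, defining it alongside the auto-imported Kernel.max/2 doesn’t cause a conflict.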

With this introduction to testing out of the way, let’s look at the namesake of this article, property tests.

What Are Property Tests?

We all know what regular tests look like. What makes property tests different? When writing regular tests, you usually come up with specific examples of how the code should work and write them down. In the basic example above, we tested our SuperMath.max/2 function by passing in 2 and 1 and asserting that the larger number, 2, is returned. That’s one example, but what if we pass the numbers in the opposite order, pass the same number twice, or make one of the numbers negative? We can create tests for all these scenarios manually:

    defmodule SuperMathTest do
      use ExUnit.Case, async: true

      test "max/2 returns the largest number if it's the first parameter" do
        # Precondition: none for this test.

        # Test: we get the maximum of two numbers.
        result = SuperMath.max(2, 1)

        # Assertion: the largest number is returned.
        assert result == 2
      end

      test "max/2 returns the largest number if it's the second parameter" do
        # Precondition: none for this test.

        # Test: we get the maximum of two numbers.
        result = SuperMath.max(1, 2)

        # Assertion: the largest number is returned.
        assert result == 2
      end

      ...
    end

And if you’re a bit more advanced, you might consider just specifying the inputs and using a loop to create the test cases:

    defmodule SuperMathTest do
      use ExUnit.Case, async: true

      for {a, b} <- [{1, 2}, {2, 1}, {1, 1}, {2, -2}] do
        test "max/2 returns the largest number given #{a} and #{b}" do
          a = unquote(a)
          b = unquote(b)

          # Precondition: none for this test.

          # Test: we get the maximum of two numbers.
          result = SuperMath.max(a, b)

          # Assertion: the largest number is returned.
          assert result == max(a, b)
        end
      end
    end

But this still relies on you having all the possible combinations in your head. For this specific function, the space of possibilities is small enough that you could feasibly cover it all manually, but what about much more complicated functions?

This is where property testing comes in. Property testing allows you to specify in general terms what inputs you want, and it automatically generates many more test cases than you could ever come up with manually.

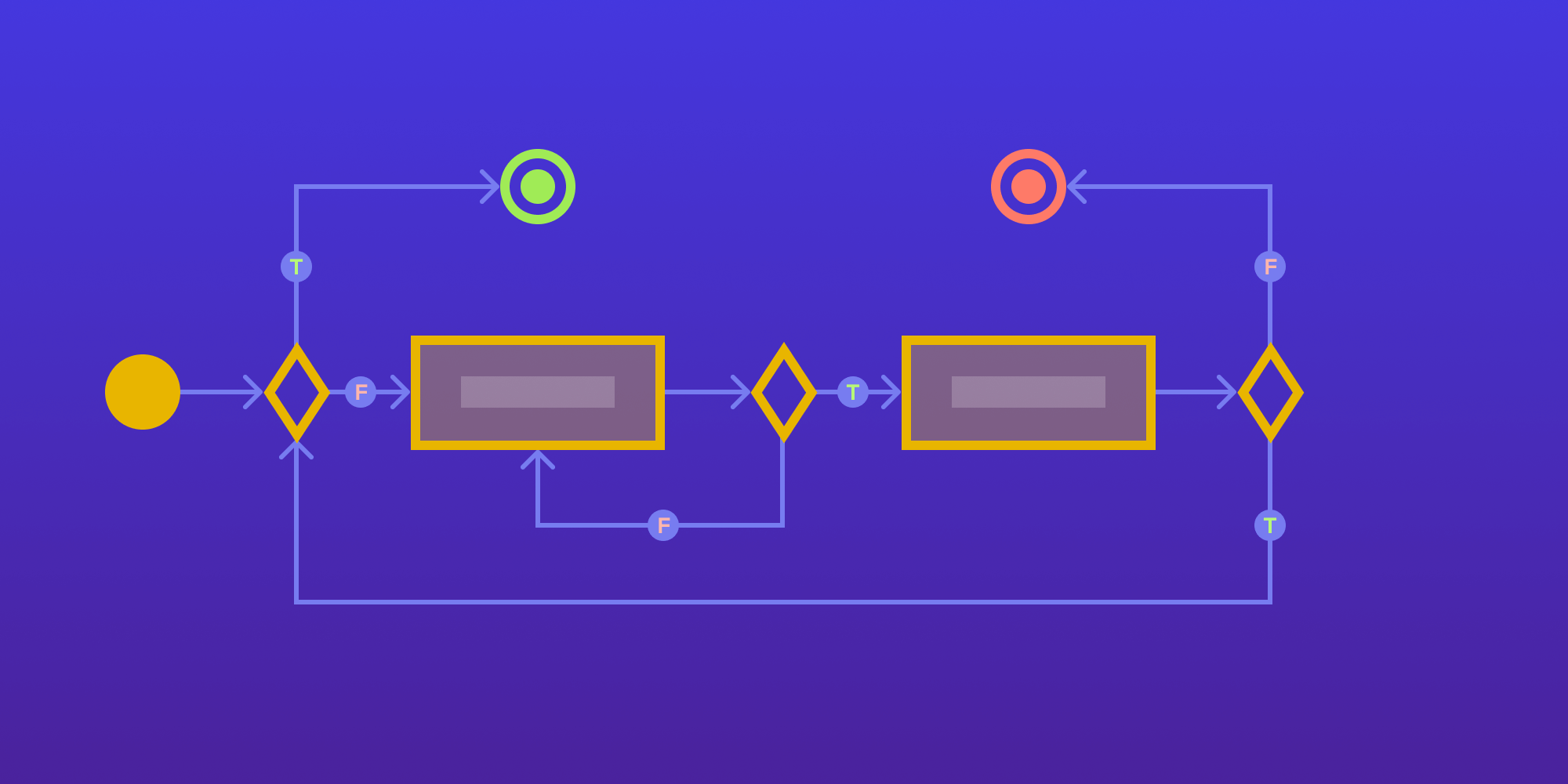

Let’s look at the outline of property tests to compare them to the regular way of testing:

- Describe the valid inputs.
- Check that the inputs fulfill some preconditions.
- Run your code with the generated inputs.
- Check that the output is what’s expected.
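To make these four steps concrete, here is a hand-rolled sketch in plain Elixir. NaivePropertyCheck is a hypothetical helper written only for illustration; it is not part of any library, and real property testing libraries do considerably more (most notably shrinking failing inputs).

```elixir
defmodule NaivePropertyCheck do
  # Hypothetical helper for illustration only.
  # `generate` is a zero-arity function that returns a random input,
  # `precondition` and `property` are one-arity functions that return a boolean.
  def run(generate, precondition, property, runs \\ 100) do
    Enum.each(1..runs, fn _ ->
      # Step 1: describe the valid inputs by asking the generator for a value.
      input = generate.()

      # Step 2: inputs that don't fulfill the precondition are skipped.
      # Steps 3 and 4: run the code under test and check its output.
      if precondition.(input) and not property.(input) do
        raise "property failed for input: #{inspect(input)}"
      end
    end)

    :ok
  end
end

# Usage: max/2 must return one of its arguments, and never the smaller one.
:ok =
  NaivePropertyCheck.run(
    fn -> {Enum.random(-1_000..1_000), Enum.random(-1_000..1_000)} end,
    fn {_a, _b} -> true end,
    fn {a, b} ->
      result = max(a, b)
      result in [a, b] and result >= a and result >= b
    end
  )
```

Note that the property is stated in general terms (the result is one of the inputs and no input exceeds it) rather than as a list of expected values.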

The specific syntax and available options depend on the library you use for your property testing. Let’s look at how it works in Elixir.

How Do Property Tests Work in Elixir?

In Elixir, you can use the StreamData package, which provides tools to write property tests. It contains helpers to generate streams of random data, along with some macros to allow you to more easily write property tests. Let’s look at how our previous test looks as a property test:

    defmodule SuperMathTest do
      use ExUnit.Case, async: true
      use ExUnitProperties

      property "max/2 returns the largest number" do
        # Describe valid inputs.
        check all a <- StreamData.integer(),
                  # You can also use `integer()`, as `StreamData` will be imported.
                  b <- StreamData.integer() do
          # Test: we get the maximum of two numbers.
          result = SuperMath.max(a, b)

          # Assertion: the largest number is returned.
          assert result == max(a, b)
        end
      end
    end

Note the use of StreamData.integer(), which generates a random integer. There are helpers for other data types you commonly use that can be combined to generate any data you might need. I’ll show some more advanced examples of this later. For now, I want to discuss some other things this gives us:

- Great coverage of the input space. Since this generates many different combinations of inputs, edge cases are far more likely to be covered than with a handful of handpicked examples. This becomes especially true as we go beyond two integers and generate more advanced inputs.
- StreamData is shrinkable. What does this mean in the context of property testing? It means that if a generated test case fails, the inputs will automatically shrink toward the smallest input that still triggers the failure. In the case of numbers, this could mean going toward 0, and in the case of lists, it could mean trying with a shorter list of elements. Having the smallest possible case to reproduce errors is immensely helpful, as it makes debugging and fixing test failures much easier.
- Test failures include the generated values, so you can easily extract them into a standalone test that reproducibly fails. This is useful both for debugging and for keeping a particularly interesting case that you always want to run.
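As a sketch of how generators combine beyond two integers, the property below feeds non-empty lists of integers to Enum.max/1. It is written as a standalone script and assumes the stream_data package can be fetched with Mix.install; in a Mix project you would list the dependency in mix.exs and drop the first two lines. The module and property names are made up for this example.

```elixir
# Assumption: run as a standalone script with network access to fetch the package.
Mix.install([{:stream_data, "~> 1.0"}])
ExUnit.start()

defmodule ListMaxTest do
  use ExUnit.Case, async: true
  use ExUnitProperties

  property "Enum.max/1 returns an element that no other element exceeds" do
    # list_of/2 wraps another generator; min_length: 1 avoids the empty list,
    # for which Enum.max/1 raises.
    check all list <- list_of(integer(), min_length: 1) do
      result = Enum.max(list)

      assert result in list
      assert Enum.all?(list, &(&1 <= result))
    end
  end
end
```

If this property ever failed, shrinking would try shorter lists and smaller integers before reporting the failing input.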

Example Time!

Let’s look at this incorrect implementation of SuperMath.max/2:

    defmodule SuperMath do
      def max(a, b) do
        a
      end
    end

Running the test from before reveals the following output:

    1) property max/2 returns the largest number (SuperMathTest)
       test/super_math_test.exs:5
       Failed with generated values (after 6 successful runs):

           * Clause:    a <- StreamData.integer()
             Generated: 2

           * Clause:    b <- StreamData.integer()
             Generated: 3

       Assertion with == failed
       code:  assert result == max(a, b)
       left:  2
       right: 3

Things that immediately jump out:

- "after 6 successful runs": despite being wrong, our implementation worked for a bit. Luckily, property testing doesn’t give up so easily.
- The generated values are shown, and we can see that 2 is returned instead of 3. This makes you wonder if those were the values that initially triggered the failure.

Let’s have a look at what values were generated to trigger the failure to see the shrinking in action:

    "a: 1 b: 0"   # good
    "a: 2 b: -2"  # good
    "a: 3 b: 0"   # good
    "a: -1 b: -5" # good
    "a: 3 b: 0"   # good
    "a: 2 b: 5"   # bad
    "a: 2 b: 0"   # good
    "a: 2 b: 3"   # bad
    "a: 2 b: 0"   # good
    "a: 2 b: 2"   # good
    "a: 2 b: 4"   # bad
    "a: 2 b: 0"   # good
    "a: 2 b: 2"   # good
    "a: 2 b: 3"   # bad
    "a: 2 b: 0"   # good
    "a: 2 b: 2"   # good

You can see the mentioned six successful runs until the test finally failed at a: 2 b: 5. Notice how even after it failed, it still kept trying different combinations to simplify the failure case. While, in this case, the difference between a: 2 b: 5 and a: 2 b: 3 as the reported minimal failure isn’t massive, this same approach is used when you generate lists of data, meaning if the property testing framework finds an error in a list of 100 elements, it’ll try to find the smallest list that still causes an error.
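To illustrate the idea behind shrinking, here is a deliberately simplified sketch for a single integer. It is not StreamData’s actual algorithm, and ShrinkSketch and its functions are hypothetical names: the sketch simply keeps moving to a simpler value for as long as that simpler value still fails.

```elixir
defmodule ShrinkSketch do
  # Simpler candidates for an integer: zero, half the value, and one step toward zero.
  def candidates(0), do: []
  def candidates(n), do: Enum.uniq([0, div(n, 2), n - sign(n)])

  # Repeatedly replaces the value with the first simpler candidate that still fails.
  # Stops when no simpler candidate reproduces the failure.
  def shrink(value, fails?) do
    case Enum.find(candidates(value), fails?) do
      nil -> value
      simpler -> shrink(simpler, fails?)
    end
  end

  defp sign(n) when n > 0, do: 1
  defp sign(_n), do: -1
end

# The broken implementation from above always returns its first argument.
broken_max = fn a, _b -> a end

# With a fixed at 2, a value of b "fails" when the broken result differs from max/2.
fails? = fn b -> broken_max.(2, b) != max(2, b) end

IO.inspect(ShrinkSketch.shrink(5, fails?))
# prints 3
```

Starting from the failing b = 5, the sketch tries 0 and 2 (both pass), settles on 4, then on 3, and stops there because 0, 1, and 2 all pass. That matches the minimal case reported in the output above.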

If this all sounds too good to be true, don’t worry; in the next section, I’ll discuss some limitations and outline when not to use property tests.

When Not to Use Property Tests

As great as they are, property tests shouldn’t be used for everything. Where they really excel is when testing code where the input space is large and where validating the result is easy. Since I think this is very important, let me just drive it home:

- The input space needs to be large.
- Validating the result needs to be easy.

Next, let’s look into why this is important.

Large Input Space

Imagine your product offers multiple storage backends — like Postgres, S3, and local file storage — and you want to test the migration between each of those. Since you can’t migrate from a storage backend to itself, that leaves only six combinations of migrations to test. This could easily be captured using the loop approach shown above. In fact, writing this as a property test probably would be harder since you’d have to capture the condition of the two backends not being equal:

    defmodule StorageMigrationTest do
      use ExUnit.Case, async: true
      use ExUnitProperties

      # Written as a property test.
      property "check all migrations" do
        # We can define the generator for all possible backends once.
        storage_backend =
          StreamData.one_of([
            StreamData.constant(:s3),
            StreamData.constant(:postgres),
            StreamData.constant(:local)
          ])

        check all(
                {backend_a, backend_b} <-
                  StreamData.filter(
                    StreamData.tuple({storage_backend, storage_backend}),
                    fn {backend_a, backend_b} ->
                      # We need to filter out all generated options that try to migrate to the same backend.
                      backend_a != backend_b
                    end
                  )
              ) do
          # Run your migration test from a to b.
        end
      end

      # Written as a parametrized test.
      for {backend_a, backend_b} <- [
            {:postgres, :s3},
            {:postgres, :local},
            {:s3, :postgres},
            {:s3, :local},
            {:local, :s3},
            {:local, :postgres}
          ] do
        test "it successfully migrates from #{backend_a} to #{backend_b}" do
          # Run your migration test from a to b.
        end
      end
    end

In my opinion, option two is much neater. Since the input space is so small, we can list all permutations explicitly, which makes the test more readable. Unlike the property test, it doesn’t run any combination twice, and it leads to much neater test output, since each case gets its own description:

    MigrationTest [test/migration_test.exs]
      * property check all migrations (9.4ms) [L#5]
      * test it successfully migrates from postgres to local (0.00ms) [L#36]
      * test it successfully migrates from local to s3 (0.00ms) [L#36]
      * test it successfully migrates from s3 to local (0.00ms) [L#36]
      * test it successfully migrates from postgres to s3 (0.00ms) [L#36]
      * test it successfully migrates from local to postgres (0.00ms) [L#36]
      * test it successfully migrates from s3 to postgres (0.00ms) [L#36]
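If the list of backends grows, the pairs for the parametrized test don’t have to be typed by hand. As a sketch (this isn’t something the test above does), a comprehension with a filter produces the same six combinations:

```elixir
backends = [:postgres, :s3, :local]

# Every ordered pair of distinct backends. The `a != b` filter drops
# migrations from a backend to itself.
migrations = for a <- backends, b <- backends, a != b, do: {a, b}

IO.inspect(length(migrations))
# prints 6
```

The trade-off is readability: the explicit list shows every case at a glance, while the comprehension stays correct when a backend is added.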

The other time property tests aren’t great is when checking correctness is hard.

Validation

You might have noticed in the first example, where we tested the SuperMath module, that we used the Kernel.max/2 function to check that the result of our max implementation is correct. This is a common pattern with property testing, but it only works if, given an arbitrary input, validating the output of the tested code is easy. That means that any test where validating the result would essentially require reimplementing the function being tested isn’t suitable for property testing. Here’s an example of what I mean:

    defmodule SuperMath do
      def cool_number(a) do
        if a > 10 do
          a * 3
        else
          a * 2
        end
      end
    end

Here we have cool_number/1. This function takes a number and returns a cool number. The issue with property tests here is that the results don’t share any common properties that we can assert on. We can’t:

- Assert on the result being bigger than the input, since in the case of negative numbers, that wouldn’t hold true.
- Assert that the output is different than the input, since for 0, that wouldn’t hold true.
- Assert that the result is odd or even.

In short, the output doesn’t share any common characteristics we can verify the correctness of, so what do we end up with for a test? Let’s see:

    defmodule SuperMathTest do
      use ExUnit.Case, async: true
      use ExUnitProperties

      property "cool_number/1 works" do
        check all(a <- StreamData.integer()) do
          # Test: we get a cool number.
          result = SuperMath.cool_number(a)

          # Assertion: a cool number is returned.
          if a > 10 do
            assert result == a * 3
          else
            assert result == a * 2
          end
        end
      end
    end

Yup, we had to essentially reimplement the function here to verify that the output of each input is correct. In cases like these, you have a few options:

- Move the decision on whether to multiply by two or three up a level, and test the multiplication separately now that it can be captured in a property test.
- Choose a few examples that sufficiently cover all branches and go with regular unit tests.

Of course, this is a very simplistic example, but if you find yourself having to reimplement your logic in your property tests, it often means that you’re either trying to test too much at once, or that your code isn’t very testable to begin with.
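As a sketch of the first option, cool_number/1 could be split so that the branching lives in one small function and the multiplication in another. The module name and the multiplier/1 and scale/2 functions are hypothetical, chosen only for this illustration.

```elixir
defmodule SuperMathRefactored do
  # Hypothetical split of cool_number/1 into a decision and a calculation.
  def cool_number(a), do: scale(a, multiplier(a))

  # The decision: only two branches, easily covered by two example-based tests.
  def multiplier(a) when a > 10, do: 3
  def multiplier(_a), do: 2

  # The calculation: now has a property that doesn't restate the implementation.
  def scale(a, factor), do: a * factor
end

# Property of scale/2: dividing the result by the factor gives back the input,
# with no remainder. Checked exhaustively here over a small range for brevity.
for a <- -100..100, factor <- [2, 3] do
  result = SuperMathRefactored.scale(a, factor)
  true = div(result, factor) == a and rem(result, factor) == 0
end
```

The check on scale/2 uses division as an inverse instead of repeating the multiplication, which is the kind of property the original cool_number/1 didn’t offer.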

With all this out of the way, let’s look at a few examples directly from our work where we employed property tests with great success.

Real-World Examples

Let’s start with PSPDFKit Server. One area where we used property tests is with our Collaboration Permissions feature.

PSPDFKit Server

Here are a few examples of how we used property tests to reduce how much code we had to write:

    property "parse/1 with a single permission" do
      check all(p <- permission_string()) do
        assert {:ok, permission} = Collaboration.parse(p)
        assert permission.content_type in [:annotations, :comments, :form_fields]
        assert permission.action in [:view, :edit, :delete, :fill, :set_group, :reply]
        assert valid_scope?(permission.scope)
      end
    end

This first property test is designed to check that any valid permission string can be parsed. It uses the custom permission string generator we built, parses the generated strings (which are all supposed to be valid), and checks that the results contain the right data. We still have specific test cases for the parser to check that each specific value is mapped correctly, but this allows us to check if there are any unexpected combinations that would lead to errors.

We also employed property tests to ensure our serialization/deserialization logic works correctly:

    property "deserialize/1 is an inverse of serialize/1" do
      check all(permissions <- permissions(), user_id <- user_id()) do
        filter = ContentFilter.from_permissions(permissions, user_id)
        serialized = ContentFilter.serialize(filter)
        assert ContentFilter.deserialize(serialized) == filter
      end
    end

By using our permissions() and user_id() generators, we can check that any arbitrary permission can be serialized and deserialized without losing any information.
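The same round-trip pattern can be tried without any of our internal modules. The sketch below is a stand-in, not our ContentFilter code: it uses Erlang’s term_to_binary/1 and binary_to_term/1 as the serialize/deserialize pair and StreamData’s term() generator for the input. It is written as a standalone script and assumes the stream_data package can be fetched with Mix.install.

```elixir
# Assumption: run as a standalone script with network access to fetch the package.
Mix.install([{:stream_data, "~> 1.0"}])
ExUnit.start()

defmodule RoundTripTest do
  use ExUnit.Case, async: true
  use ExUnitProperties

  property "binary_to_term/1 is an inverse of term_to_binary/1" do
    # term() generates arbitrary values: numbers, atoms, binaries, lists, tuples, and so on.
    check all value <- term() do
      serialized = :erlang.term_to_binary(value)

      # Deserializing what we serialized must give back the original value.
      assert :erlang.binary_to_term(serialized) == value
    end
  end
end
```

Round-trip properties like this are attractive because the expected output is simply the input, so no logic has to be reimplemented in the test.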

A note on our custom generators — we aren’t doing anything special here; they’re just stringing together functions that StreamData already provides. Here’s an example:

    @doc """
    Generates a valid collaboration permission.
    """
    def permission do
      bind(permission_string(), fn ps ->
        {:ok, cp} = PS.Permissions.Collaboration.parse(ps)
        constant(cp)
      end)
    end

    @doc """
    Generates a valid collaboration permissions string.
    """
    def permission_string do
      bind(tuple({content_type(), action_string(), scope_string()}), fn {content_type_string, action_string, scope_string} ->
        constant(Enum.join([content_type_string, action_string, scope_string], ":"))
      end)
    end

    def content_type_string() ...

Here, we decomposed the generation of a collaboration permission struct by generating the separate components and joining them together.

Now, let’s look at some examples of our newest product, PSPDFKit API.

PSPDFKit API

One of the more interesting challenges we had to tackle was that we wanted to inspect multipart form data requests in a streaming fashion. For this, we had to build our own state machine for parsing multipart requests. Since this lies in the critical path of our main build API, making sure it was working correctly was of the utmost importance. It also presented an ideal case for property tests:

- The input space is enormous. Apart from the request itself, there are also the different chunk sizes, depending on network conditions we have to deal with.
- Checking if things are correct at the end is easy. We know what we put in, and we can verify that that same content came out. We also know which functions were supposed to be called, so it’s easy to assert on them actually being called.

The full test is a bit too large, so here’s the test with some of the code replaced with functions:

    property "multipart can be parsed when chunked" do
      check all(
              {chunk_size, parts} <-
                tuple(
                  {chunk_size(),
                   list_of(
                     multipart(),
                     min_length: 1,
                     max_length: 50
                   )}
                ),
              max_runs: 50
            ) do
        # We generate a multipart request containing all the specified parts.
        {headers, multipart} =
          parts
          # This will configure our `StreamHandlerMock` to expect to be called with the right data, given the parts.
          |> setup_handler_assertions()
          # This will generate the required headers and the actual content of the multipart request, given the parts.
          |> generate_request_data_from_parts()

        # Initialize our `StreamParser`.
        initial_state =
          StreamParser.initial_state(
            headers,
            default_parser_opts()
          )

        # This will recursively send chunks to the `StreamParser` until the final response.
        {final_response, state} =
          call_next_chunk(multipart, 0, chunk_size, initial_state, <<>>)

        # Ensure that the returned chunk is unmodified.
        multipart_size = byte_size(multipart)

        assert %{
                 state: :looking_for_end_of_header,
                 total_size_bytes: ^multipart_size
               } = state

        assert final_response == multipart

        # Ensure that our mock was called the correct number of times.
        verify!(StreamHandlerMock)
      end
    end

This test does a lot, so let me walk you through some of the highlights:

- The generated cases not only vary in the content that’s sent (generated by the multipart() generator), but also in how many bytes each chunk we process contains.
- Our StreamParser can call functions on a supplied StreamHandler, and we can set up a mock based on the generated parts to ensure that not only did we not break the data, but that we also interpreted it correctly and called the right functions at the right time.
- Finally, since the StreamParser should only pass the data through, asserting on correctness is as easy as checking that the input in the beginning equals the output at the end.
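The "input equals output" check can be sketched in isolation. The example below is not our StreamParser or its test helpers; ChunkSketch is a hypothetical module that only splits a binary into chunks of a given size, so that the pass-through assertion can be shown for several chunk sizes.

```elixir
defmodule ChunkSketch do
  # Hypothetical helper: splits a binary into chunks of at most `size` bytes.
  def chunks(<<>>, _size), do: []

  def chunks(binary, size) when size > 0 and byte_size(binary) <= size, do: [binary]

  def chunks(binary, size) when size > 0 do
    <<chunk::binary-size(size), rest::binary>> = binary
    [chunk | chunks(rest, size)]
  end
end

# A stand-in payload; a real test would generate multipart request bodies.
payload = String.duplicate("--boundary\r\ncontent\r\n", 40)

# Whatever the chunk size, reassembling the chunks must give back the payload.
for size <- [1, 7, 64, 1_000, 4_096] do
  reassembled = payload |> ChunkSketch.chunks(size) |> IO.iodata_to_binary()
  true = reassembled == payload
end
```

In a property test, both the payload and the chunk size would come from generators instead of fixed values, which is what makes the input space so large.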

Conclusion

I could go on listing more examples of where we found property tests to be useful, but I think by now you’ll probably have made up your mind. Property tests are a great tool when you know how and when to use them — not only when using a functional language such as Elixir, but in all languages. I hope this article gave you an idea of where property testing makes sense and where a more standard approach is preferable, and that you’ll try it out yourself in your language of choice.